AI Smart: Navigating the Ethical Labyrinth of Intelligent Systems

The concept of “AI smart” permeates modern life, from the virtual assistants on our phones to the complex algorithms shaping global finance. But as artificial intelligence grows more sophisticated, the ethical landscape we must navigate grows more complex as well. This article explores the multifaceted implications of “AI smart,” emphasizing responsible innovation and societal impact.

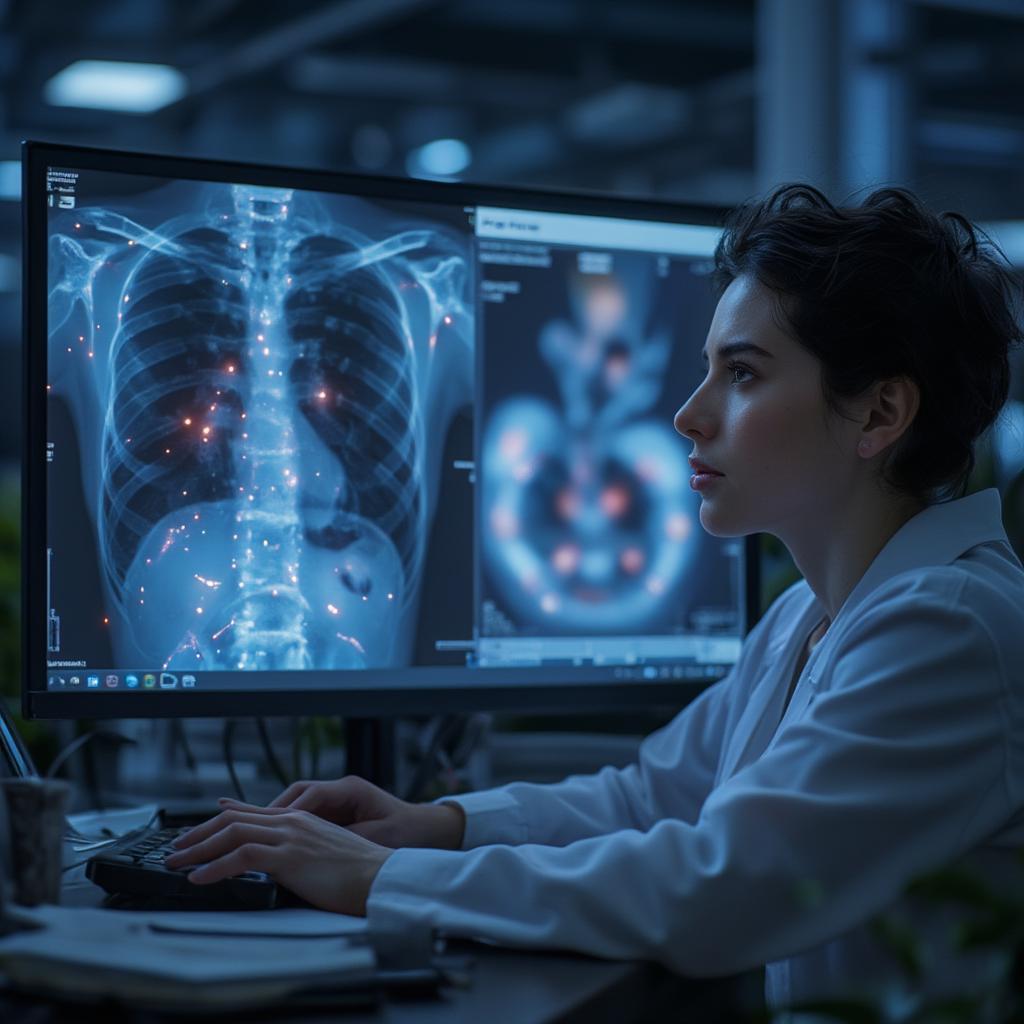

The allure of “AI smart” lies in its promise of efficiency, precision, and unprecedented problem-solving capabilities. Imagine self-driving cars navigating complex traffic patterns, or AI systems that match or even exceed human doctors at specific diagnostic tasks. These possibilities are no longer science fiction but rapidly approaching realities. Yet alongside these advances, crucial questions arise: How do we ensure fairness and avoid bias in algorithms? How can we protect privacy in a world where data fuels AI? And ultimately, how can we harness the power of “AI smart” for the betterment of humanity? Before diving in, let’s establish a framework to guide us through the ethical considerations.

Understanding the Core of “AI Smart”: Beyond the Hype

What exactly constitutes “AI smart”? At its core, it signifies the ability of AI to learn, adapt, and perform tasks that typically require human intelligence. This encompasses everything from pattern recognition and natural language processing to complex decision-making and creative problem-solving. However, this “smartness” is not inherently neutral; it reflects the data a system is trained on and the choices and biases of the people who build it. This is where we, as a society, must proactively engage and set boundaries to ensure these technologies work for the common good.

Consider, for instance, facial recognition systems. While useful for security purposes, these systems have been shown to exhibit higher error rates when identifying individuals from certain racial or ethnic backgrounds. This is not because AI is inherently racist; rather, biases can be built in inadvertently during training, for example when some groups are underrepresented in the training images. Understanding that “AI smart” is a tool whose outputs can carry unintended consequences is therefore paramount. This isn’t to say that we should shy away from such powerful technology; rather, it’s a call for vigilance and a strong ethical compass to ensure its responsible use.
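One way to surface the kind of disparity described above is disaggregated evaluation: measuring a system's error rate separately for each group instead of reporting a single overall figure. The Python sketch below assumes a hypothetical test set of `(group, predicted, actual)` records; the group names, labels, and the `error_rate_by_group` helper are illustrative, not taken from any particular facial recognition system.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the fraction of incorrect predictions for each group.

    `records` is an iterable of (group, predicted_label, true_label) tuples.
    """
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical face-verification results: (group, predicted, actual).
results = [
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_b", "match", "no_match"),
    ("group_b", "no_match", "no_match"),
    ("group_b", "no_match", "match"),
    ("group_b", "match", "match"),
]

print(error_rate_by_group(results))  # {'group_a': 0.0, 'group_b': 0.5}
```

A single overall accuracy over these eight records would be 75%, a figure that hides the fact that every error falls on one group.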

Ethical Challenges Posed by AI Smart

The deployment of “AI smart” comes with a range of ethical challenges. Here, we’ll delve into several critical areas that demand our attention.

Bias and Discrimination in AI Algorithms

Perhaps one of the most pressing concerns is the potential for AI to perpetuate or even amplify existing societal biases. AI algorithms are trained on large datasets, so if those datasets reflect biases present in the real world, the AI is likely to reproduce and potentially exacerbate them. An AI used to make decisions about loan applications might unfairly reject individuals from disadvantaged communities, or an AI system used for hiring might exclude qualified candidates based on factors like gender or ethnicity.

To mitigate this challenge, we need to ensure that data used to train AI is diverse, representative, and meticulously checked for bias. Moreover, the algorithms themselves must be designed with fairness and equity as guiding principles. “The future of AI is not just about its capabilities but about ensuring it aligns with our values,” states Dr. Eleanor Vance, a leading AI ethicist. “We need to move beyond the ‘black box’ approach and demand transparency in how these systems work.”
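Checking a system for bias can start with something as simple as comparing outcomes across groups. The Python sketch below applies that idea to the loan example: it computes each group's approval rate and the ratio of the lowest rate to the highest. The 0.8 threshold mirrors the “four-fifths rule” used as a rule of thumb in US employment-selection guidelines; treating it as the right cutoff for lending is an assumption, and the decisions, group names, and helper functions are hypothetical.

```python
def selection_rates(decisions):
    """Return the approval rate for each group from (group, approved) pairs."""
    approved = {}
    total = {}
    for group, is_approved in decisions:
        total[group] = total.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(is_approved)
    return {group: approved[group] / total[group] for group in total}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Hypothetical loan decisions: (applicant group, approved?)
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'group_a': 0.6, 'group_b': 0.3}
print(round(ratio, 2))  # 0.5

FOUR_FIFTHS = 0.8  # rule-of-thumb threshold, assumed here for illustration
if ratio < FOUR_FIFTHS:
    print("Possible adverse impact: review the model and its training data.")
```

A low ratio does not prove discrimination on its own, and a ratio near 1.0 does not prove fairness; it is a screening signal that tells reviewers where to look more closely.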

The Privacy Implications of AI Smart

The power of “AI smart” hinges on the availability of massive amounts of data, which raises serious concerns about privacy and data security. As AI systems become more pervasive, they collect vast quantities of personal information, from our browsing habits and social media interactions to our health records and financial transactions. If this data is not handled properly, it can be misused, whether through hacking, unauthorized surveillance, or being repurposed without our consent. Balancing the use of data for innovation against the protection of individual privacy is complex but essential as AI plays an ever larger role in our lives.

Consider the applications of AI in healthcare. While AI can accelerate diagnosis and personalize treatments, it also requires access to sensitive patient data. We must ask: how can we ensure this data is used responsibly and securely? What rights do patients have over how their data is handled? It is imperative to establish robust privacy frameworks and data governance policies that safeguard individuals’ fundamental rights and freedoms, and these frameworks must keep adapting as technology and society change. We must move beyond asking how much data we can collect and start asking whether we should collect it at all, and whether we can do so ethically.

Transparency and Explainability in AI Smart

As AI becomes more sophisticated, its decision-making processes often resemble a “black box”: opaque and difficult to understand. This lack of transparency is problematic, especially where AI’s decisions have significant consequences. If an AI is used to inform parole decisions, evaluate credit risk, or monitor the performance of a power grid, we need to be able to understand how it arrived at its conclusions. When we cannot see how a system reaches its decisions, we risk accepting outcomes we neither agree with nor understand, and letting those outcomes shape our lives.

Explainable AI (XAI) is an emerging field that aims to make AI more transparent and interpretable. XAI seeks to reveal the logic behind AI decisions, allowing us to audit and validate them. Developing XAI techniques is crucial for building trust in “AI smart” systems and for holding the people and organizations that deploy them accountable. We cannot blindly trust the decisions of an AI system; we need to understand its logic, parameters, and data in order to decide whether AI should be used in a given case at all.
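For simple models, an explanation can be computed exactly. The Python sketch below assumes a hypothetical linear credit-risk score (the feature names, weights, and baseline applicant are invented for illustration) and breaks one applicant's score into per-feature contributions relative to a baseline. More complex models need approximate attribution methods from the XAI literature, such as SHAP or LIME, which this sketch does not implement.

```python
# Hypothetical linear credit-risk model: higher score = higher assumed risk.
WEIGHTS = {
    "debt_to_income": 2.0,
    "missed_payments": 0.8,
    "years_of_credit_history": -0.1,
}
BIAS = -1.0


def score(applicant):
    """Risk score: bias plus the weighted sum of the applicant's features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant, baseline):
    """Per-feature contribution to the score, relative to a baseline applicant.

    For a linear model these contributions sum exactly to
    score(applicant) - score(baseline).
    """
    return {
        name: WEIGHTS[name] * (applicant[name] - baseline[name])
        for name in WEIGHTS
    }


baseline = {"debt_to_income": 0.3, "missed_payments": 0, "years_of_credit_history": 10}
applicant = {"debt_to_income": 0.5, "missed_payments": 2, "years_of_credit_history": 4}

contributions = explain(applicant, baseline)
# List the factors that moved the score the most first.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>25}: {value:+.2f}")
print(f"{'total change':>25}: {score(applicant) - score(baseline):+.2f}")
```

Because the model is linear, the contributions add up exactly to the difference between the applicant's score and the baseline score, so a reviewer can see that the two missed payments account for the largest share of the increase.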

The Impact of AI on Jobs and the Economy

The proliferation of “AI smart” is also raising concerns about the future of work. AI can automate many mundane and repetitive tasks, but that same capability could displace a significant number of jobs across various sectors. Greater automation in manufacturing, for instance, may displace many blue-collar jobs, while automated writing tools may affect journalism, copywriting, and education. It is important to remember, however, that technological advances do not simply destroy jobs; they also create new opportunities.

Navigating this transition requires proactive efforts to retrain workers and adapt educational systems so that people have the skills they need to thrive in an AI-driven economy. Governments and industry must also collaborate to ensure a just transition that protects workers’ rights and promotes inclusive growth. Collectively, and with significant foresight, we must prepare for a future in which human skills and AI capabilities work in harmony.

Towards Responsible AI: Guiding Principles

As we integrate “AI smart” into more areas of our lives, it’s critical that we actively work towards the responsible development and use of these technologies. Here are some guiding principles that can help us:

- Fairness and Equity: AI should not perpetuate or amplify existing societal biases. We must ensure that AI systems are designed and deployed in a way that treats everyone fairly and equitably. This means rigorous testing for bias, diverse training data, and transparent algorithm design.

- Transparency and Explainability: AI should not operate as a “black box.” We need to understand how AI systems make decisions, especially when those decisions have significant consequences. Transparency and explainability are essential for building trust and accountability.

- Privacy and Security: We must protect individuals’ privacy and ensure that data collected by AI is handled responsibly and securely. This means implementing robust data governance policies and respecting individuals’ fundamental rights.

- Accountability and Responsibility: When things go wrong, there must be clear lines of accountability. We must establish mechanisms that hold the designers and operators of AI systems accountable for how those systems behave. “We can’t expect to just release AI and then let it go,” comments Amelia Rodriguez, a renowned researcher in human-centered AI. “Ethical development is a continuous process, not a one-off event.”

- Human Oversight and Control: AI should augment human capabilities, not replace them entirely. Humans must retain control over critical decisions and maintain the ability to override AI decisions when necessary. The focus should not be on AI replacing humans, but on human skills working in harmony with AI.

To put these principles into practice, we must involve stakeholders from diverse backgrounds, including ethicists, policymakers, technologists, and the general public. The development and deployment of “AI smart” should not be left solely to technology experts; it should be a collective endeavor.

How Can We Ensure AI Smart Is Used for Good?

The question then becomes, how do we implement these principles in practice? It requires a multi-pronged approach involving the following key elements:

- Education and Awareness: Fostering public awareness of the potential benefits and risks of “AI smart” is paramount. Education and public discourse can help ensure that AI innovation aligns with our societal values.

- Policy and Regulation: Policymakers have a key role to play in establishing ethical guidelines and regulations for AI development and deployment. This includes developing robust data protection laws and addressing bias in AI algorithms.

- Industry Collaboration: The tech industry must take a proactive role in building responsible AI systems. This includes prioritizing ethical concerns, adopting fair practices, and fostering a culture of accountability.

- Research and Innovation: Continuing research into XAI, bias detection, and privacy-enhancing technologies is vital. Innovation should focus not only on performance but also on building ethical and responsible AI. For a look at how these principles apply within a specific field, you can explore AI in smart cities.

- Global Cooperation: Given the global nature of AI, collaboration across borders is essential. International standards and agreements can help ensure that AI development aligns with universal values.

The path to responsible AI is not easy, but it is essential for ensuring a future where AI enhances, rather than compromises, human well-being. We must work together to ensure the power of “AI smart” is used to create a more just, equitable, and prosperous world for all.

The Future of AI Smart: A Shared Responsibility

The future of “AI smart” is not predetermined; we are actively creating it. We have a responsibility to shape this future by embracing innovation while prioritizing our core values. Through thoughtful dialogue, we can harness the power of “AI smart” for the betterment of humanity. The goal is not to limit innovation, but to guide it in a direction that promotes fairness, transparency, and accountability.

The choices we make today will have a profound impact on the future of “AI smart,” and we must commit to ensuring that these technologies are used ethically and responsibly. That means committing not only to innovation but also to ethical oversight.

The ethical considerations surrounding “AI smart” are not just a technical challenge; they are a societal challenge that requires honest, open discussion and bold choices that will shape our future. We must commit to building a world where AI empowers, rather than undermines, the human experience. Just as we would not turn a blind eye to the shortcomings of any other technology, we cannot do so with AI. The path to true “AI smart” is not just about intelligence, but about how it serves humanity. As we look towards the future, remember this fundamental truth.

To further understand the impact AI can have across various fields, you can also explore topics like AI in health and safety. The key takeaway is that a future with true “AI smart” is only possible with constant vigilance.